Introduction to A/B Testing in Web Design

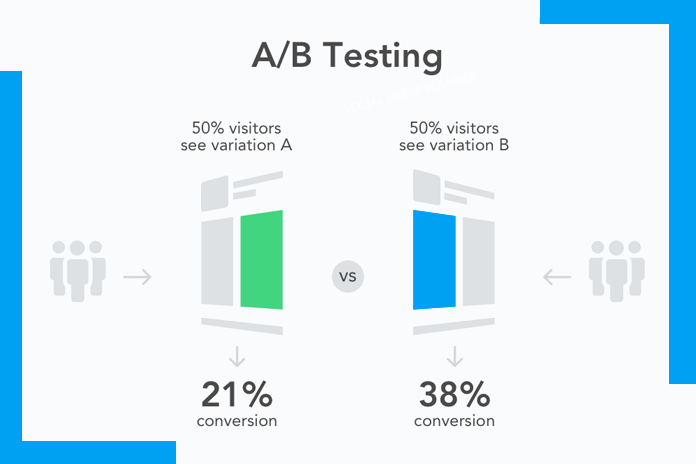

A/B testing, also known as split testing, is a powerful method used in web design to compare two versions of a webpage or interface and determine which one performs better on a chosen metric. By randomly showing each user one of two designs, often called the “A” and “B” versions, designers can analyze user interactions and make data-driven decisions that lead to improved engagement, higher conversion rates, and an overall better user experience (UX).

In the highly competitive online environment, where user preferences and behaviors can shift rapidly, A/B testing has become a cornerstone of effective web design strategies. This approach not only helps in optimizing design elements but also ensures that decisions are backed by real user data rather than assumptions.

Understanding the Fundamentals of A/B Testing

At its core, A/B testing involves comparing two variations of a web page to see which one achieves better results based on predefined metrics. The fundamental process includes:

- Creating a Hypothesis: Before starting a test, it’s crucial to establish a hypothesis about what change might lead to better performance. For example, you might hypothesize that changing a call-to-action (CTA) button’s color will lead to more clicks.

- Splitting Traffic: Users are randomly split into two groups, with one group seeing version “A” (the control) and the other seeing version “B” (the variation).

- Collecting Data: Performance metrics, such as click-through rates (CTR), bounce rates, and conversion rates, are tracked and compared between the two versions.
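The random split described above is often implemented by hashing a stable user identifier instead of flipping a coin on every page view, so a returning visitor keeps seeing the same version. The sketch below assumes a string user ID is available (for example, from a first-party cookie); the function name `assign_variant` and the experiment label are hypothetical, and commercial testing tools use their own bucketing logic.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split_a: float = 0.5) -> str:
    """Deterministically bucket a user into "A" (control) or "B" (variation).

    Hashing the experiment name together with the user ID gives each user a
    stable, effectively random position in [0, 1) for this experiment.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes as an unsigned integer and scale to [0, 1).
    position = int.from_bytes(digest[:8], "big") / 2**64
    return "A" if position < split_a else "B"


# Bucket 10,000 hypothetical users; the split should come out close to 50/50.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "cta-color-test")] += 1
print(counts)
```

Including the experiment name in the hash keeps assignments independent across experiments, so a user who lands in "B" for one test is not automatically in "B" for every other test.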

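Common variations on the approach include a simple A/B (split) test, in which only one element changes between versions, and multivariate testing, which changes several elements at once and compares every combination of them. Because traffic is divided across all of those combinations, multivariate tests need considerably more visitors to reach reliable results.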
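To see why multivariate tests are traffic-hungry, it helps to count the combinations. This sketch enumerates a full-factorial design for three page elements with two options each; the element names and copy are invented for illustration.

```python
from itertools import product

# Hypothetical elements and the options being tested for each.
elements = {
    "headline": ["Save time today", "Work smarter"],
    "cta_color": ["green", "orange"],
    "hero_image": ["photo", "illustration"],
}


def full_factorial(elements: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return every combination of options as a separate page version."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


variants = full_factorial(elements)
print(len(variants))  # 8 versions: 2 * 2 * 2
```

Each of the eight versions receives only about an eighth of the traffic, whereas a simple A/B test splits it in two, which is why multivariate testing usually suits high-traffic pages.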

The Importance of Data-Driven Decisions in Web Design

In modern web design, data plays a central role. Intuition and aesthetic judgment remain valuable sources of ideas, but A/B testing lets designers and marketers check those ideas against empirical evidence before committing to a change. This data-driven approach not only enhances user experience but also directly impacts business outcomes like sales, lead generation, and user retention.

Key performance indicators (KPIs) like time on page, conversion rates, and engagement metrics guide these decisions. By continuously testing and refining, designers can create a seamless experience that aligns with user behavior and business objectives.
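Most of these KPIs are simple ratios of raw counts. A minimal sketch, assuming you can export per-variant counts of sessions, single-page sessions, CTA impressions, CTA clicks, and conversions; the `VariantStats` class, its field names, and the numbers in the usage lines are hypothetical. Bounce rate here uses the classic single-page-session definition, and analytics tools may define it differently.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Hypothetical per-variant counts exported from an analytics tool."""
    sessions: int
    single_page_sessions: int
    cta_impressions: int
    cta_clicks: int
    conversions: int

    def conversion_rate(self) -> float:
        # Share of sessions that completed the goal action.
        return self.conversions / self.sessions

    def click_through_rate(self) -> float:
        # Share of CTA impressions that led to a click.
        return self.cta_clicks / self.cta_impressions

    def bounce_rate(self) -> float:
        # Classic definition: share of sessions that viewed only one page.
        return self.single_page_sessions / self.sessions


def relative_lift(control: float, variant: float) -> float:
    """Relative change of the variant's metric compared with the control's."""
    return (variant - control) / control


a = VariantStats(sessions=10_000, single_page_sessions=4_200,
                 cta_impressions=9_000, cta_clicks=540, conversions=300)
b = VariantStats(sessions=10_000, single_page_sessions=3_900,
                 cta_impressions=9_100, cta_clicks=637, conversions=345)
print(f"Conversion lift: {relative_lift(a.conversion_rate(), b.conversion_rate()):.1%}")
# Conversion lift: 15.0%
```

An observed lift like this is only a point estimate; whether it reflects a real difference depends on a significance check at an adequate sample size.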

Setting Clear Goals and Objectives for A/B Testing

For A/B testing to be successful, it’s essential to set clear goals and objectives. Without a well-defined purpose, testing can lead to inconclusive results and wasted resources. Common goals in web design A/B tests include:

- Increasing Conversion Rates: Testing variations in forms, landing pages, or checkout processes.

- Enhancing User Engagement: Optimizing for time on site, interactions, and repeat visits.

- Reducing Bounce Rates: Testing headline changes, layout adjustments, or content modifications to retain visitors.

It’s important to align these goals with broader business objectives. For instance, if a company’s primary objective is to boost online sales, A/B tests should focus on optimizing elements like product pages and checkout flows.

Key Elements to Test in Web Design

In web design, almost every aspect of a webpage can be tested. Some of the most impactful elements include:

- Headlines and CTAs: These are often the first elements users interact with, making them critical for conversion.

- Layout and Design: Testing different layouts, such as grid versus list views, or single-column versus multi-column formats.

- Color Schemes and Fonts: Subtle changes in color or typography can have significant effects on user perception and engagement.

- Navigation Structure and User Flow: Testing the placement of menus, breadcrumbs, and links to improve user journey efficiency.

Testing these elements systematically allows designers to identify what resonates best with users and implement changes that enhance overall performance.

Steps to Conduct a Successful A/B Test

Running an A/B test requires careful planning and execution. The process typically involves the following steps:

- Pre-Test Planning: Define your hypothesis, objectives, and key metrics.

- Traffic Allocation: Decide how to split your audience between versions (e.g., 50/50, 70/30) and calculate the required sample size before launch so the results can be statistically meaningful.

- Running the Test: Implement the A/B test using a dedicated tool such as Optimizely or VWO. (Google Optimize, once a popular free option, was discontinued by Google in September 2023.)

- Analyzing the Results: Once the test has reached its planned sample size, evaluate the data to see which version performed better and whether the difference is statistically significant.
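For the "large enough" sample in the planning step, a common approach is a power calculation for comparing two conversion rates. The sketch below uses the standard normal-approximation formula for a two-sided test of two proportions; the function name and the defaults (5% significance, 80% power) are conventional assumptions, not requirements of any particular tool.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, mde_relative: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variant to detect a given relative lift.

    baseline      -- control conversion rate, e.g. 0.03 for 3%
    mde_relative  -- minimum detectable effect as a relative lift, e.g. 0.20
    alpha         -- two-sided significance level
    power         -- chance of detecting the lift if it really exists
    """
    if mde_relative == 0:
        raise ValueError("minimum detectable effect must be non-zero")
    p1 = baseline
    p2 = baseline * (1 + mde_relative)
    if not (0 < p1 < 1 and 0 < p2 < 1):
        raise ValueError("conversion rates must lie strictly between 0 and 1")
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power = 0.80
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


print(sample_size_per_variant(0.03, 0.20))
```

With a 3% baseline conversion rate and a 20% relative minimum detectable effect, this works out to roughly 14,000 visitors per variant; halving the detectable effect roughly quadruples the requirement.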

Tools and Platforms for A/B Testing

A variety of tools are available for conducting A/B tests, each offering unique features and integrations. Some of the most popular include:

- Google Optimize: Formerly a free tool that integrated with Google Analytics for basic testing needs. Google discontinued it in September 2023, so it is no longer an option for new tests.

- Optimizely: Known for its robust features, Optimizely offers multivariate testing and advanced targeting options.

- VWO (Visual Website Optimizer): Offers heatmaps, user recordings, and A/B testing to provide comprehensive insights.

Choosing the right tool depends on your budget, technical capabilities, and testing requirements. Integration with existing analytics platforms and CMS (Content Management System) is also a key consideration.

Best Practices for A/B Testing in Web Design

To maximize the effectiveness of your A/B tests, follow these best practices:

- Test One Variable at a Time: Isolate changes to ensure you know exactly what’s influencing the results.

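- Ensure Statistical Significance: Decide on the sample size and significance threshold before the test starts (95% confidence, or p < 0.05, is a common convention), and don't stop the test the moment results look significant; repeatedly checking and stopping at the first significant result inflates the false-positive rate.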

- Avoid Common Pitfalls: Watch out for biases, such as testing during holidays or periods of abnormal traffic.

These practices ensure that your test results are reliable and actionable.
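Checking significance for a conversion-rate test is usually done with a two-proportion z-test. This minimal sketch (hypothetical function name; it relies on the normal approximation, which is reasonable only when each variant has a fair number of conversions) returns the z statistic and the two-sided p-value. It is meant to be run once, at the planned sample size.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for B's conversion rate versus A's."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical result: 300 vs. 345 conversions from 10,000 visitors each.
z, p = two_proportion_z_test(300, 10_000, 345, 10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Here the observed 15% relative lift gives a p-value of about 0.07, so it would not clear a 0.05 threshold; the same rates observed over ten times as many visitors would be highly significant.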

Case Studies: Successful A/B Testing in Web Design

Numerous companies have seen significant improvements through A/B testing. For example:

- An eCommerce Brand: By testing different product page layouts, a company saw a 20% increase in purchases.

- A SaaS Company: Improved sign-up rates by 15% after experimenting with different headline variations on their landing page.

Such case studies highlight how even small design tweaks can lead to substantial business gains when backed by data.

Common Challenges in A/B Testing

Despite its benefits, A/B testing comes with challenges, such as:

- Sample Size Limitations: Smaller websites may struggle to get statistically significant results due to low traffic.

- Testing Duration and Timing: Running tests during peak traffic times or unusual events (e.g., sales) can skew results.

- Mixed or Inconclusive Results: When results are unclear, it may require additional testing or re-evaluating your hypothesis.

Addressing these challenges involves careful planning and adapting your testing strategy based on the context.
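For smaller sites, whether a test is feasible comes down to simple arithmetic: the total sample the test needs divided by the daily traffic that enters it. This sketch assumes the required sample per variant is already known from a power calculation; the function name is hypothetical, and rounding up to whole weeks (to balance weekday and weekend behavior) is a common convention rather than a hard rule.

```python
import math


def estimated_test_days(sample_per_variant: int, num_variants: int,
                        daily_visitors: float, traffic_share: float = 1.0) -> int:
    """Days needed to collect the planned sample, rounded up to whole weeks.

    traffic_share is the fraction of the page's visitors entered into the test.
    """
    if daily_visitors <= 0 or not 0 < traffic_share <= 1:
        raise ValueError("need positive traffic and 0 < traffic_share <= 1")
    visitors_in_test_per_day = daily_visitors * traffic_share
    days = math.ceil(sample_per_variant * num_variants / visitors_in_test_per_day)
    # Round up to full weeks so every day of the week is represented equally.
    return math.ceil(days / 7) * 7


# Illustrative: about 14,000 visitors per variant on a page with 500 visits a day.
print(estimated_test_days(14_000, 2, 500))  # 56 days, i.e. eight weeks
```

If the estimate runs to several months, testing a bolder change (a larger detectable effect) or a higher-traffic page is usually more practical than waiting.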

Ethical Considerations in A/B Testing

Ethics play a crucial role in A/B testing, especially regarding:

- User Privacy and Data Security: Ensure that user data is anonymized and securely handled.

- Transparency and User Consent: Users should be aware if they’re part of an experiment, especially in sensitive scenarios.

- Ethical Dilemmas in Testing Sensitive Content: Avoid testing changes that could negatively impact vulnerable user groups.

Balancing innovation with ethical responsibility ensures your tests build trust rather than harm your reputation.
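One common safeguard for handling user data securely is to replace raw user identifiers with a keyed hash before they reach experiment logs. Strictly speaking this is pseudonymization rather than anonymization: anyone holding the key can re-link the records (and under the GDPR, for example, pseudonymized data still counts as personal data), so the key must be stored separately and access-controlled. The function name and key handling below are illustrative assumptions.

```python
import hashlib
import hmac
import secrets


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Return a stable keyed SHA-256 digest to log instead of the raw ID."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


# In practice the key would come from a secrets manager, not be generated per run.
key = secrets.token_bytes(32)
event = {"user": pseudonymize("user-123", key), "variant": "B", "converted": True}
print(event)
```

Using HMAC instead of a plain hash matters because user IDs and email addresses are often guessable, and an unkeyed hash of a guessable value can be reversed by brute force.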

Integrating A/B Testing into the Design Process

To fully leverage A/B testing, it should be integrated into the overall design workflow. This includes:

- Continuous Improvement: Use testing insights to make iterative design updates.

- Cross-Department Collaboration: Designers, developers, and marketers must work together to align on objectives and interpret results.

- Strategic Design Refinement: A/B testing should inform larger design strategies and not just be limited to minor tweaks.

A culture of continuous testing and learning drives long-term success.

Measuring the ROI of A/B Testing

Understanding the return on investment (ROI) from A/B testing is essential for justifying its ongoing use. Key considerations include:

- Calculating Financial Impact: Measure how improved conversion rates translate into revenue gains.

- Weighing Costs Versus Benefits: Factor in the costs of tools, time, and resources against the benefits achieved.

- Long-Term Value: Consider how lessons learned from A/B testing contribute to long-term design excellence.

When tests are well designed and winning changes are actually shipped, the ROI from A/B testing can far exceed the initial investment.
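The financial-impact and cost-benefit points reduce to a short calculation. This sketch assumes the measured lift persists unchanged for the whole period and uses revenue rather than profit; both are simplifications that tend to overstate ROI. The function name and all figures in the usage line are hypothetical.

```python
def ab_test_roi(monthly_visitors: int, baseline_cr: float, new_cr: float,
                avg_order_value: float, months: int,
                total_cost: float) -> tuple[float, float]:
    """Return (incremental revenue, ROI) for shipping a winning variant.

    ROI = (incremental revenue - total cost) / total cost.
    """
    extra_conversions = monthly_visitors * (new_cr - baseline_cr) * months
    incremental_revenue = extra_conversions * avg_order_value
    roi = (incremental_revenue - total_cost) / total_cost
    return incremental_revenue, roi


revenue, roi = ab_test_roi(monthly_visitors=50_000, baseline_cr=0.030, new_cr=0.0345,
                           avg_order_value=80.0, months=12, total_cost=20_000.0)
print(f"Incremental revenue: {revenue:,.0f}  ROI: {roi:.0%}")
```

Substituting gross margin for order value, or discounting the lift over time, gives a more conservative estimate.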

Future Trends in A/B Testing for Web Design

A/B testing is evolving with new trends and technologies:

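- AI and Machine Learning: Automated testing tools that dynamically adjust traffic based on user behavior, for example multi-armed bandit algorithms that send more visitors to the variant currently performing best.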

- Personalization Through Dynamic A/B Tests: Delivering personalized experiences by testing multiple versions for different audience segments.

- No-Code A/B Testing Tools: Making it easier for non-technical teams to run sophisticated tests without writing code.

These trends point to a future where A/B testing becomes more accessible and effective.
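Tools that shift traffic automatically are often built on multi-armed bandit algorithms. The sketch below shows Thompson sampling with a Beta-Bernoulli model, one well-known bandit method; it is not the algorithm of any particular product, and the class name and simulated conversion rates are invented for illustration.

```python
import random


class ThompsonSampler:
    """Beta-Bernoulli Thompson sampling over page variants."""

    def __init__(self, variants):
        # Beta(1, 1) prior: every conversion rate starts out equally plausible.
        self.successes = {v: 1 for v in variants}
        self.failures = {v: 1 for v in variants}

    def choose(self, rng=random):
        # Draw a plausible conversion rate for each variant; show the highest.
        draws = {v: rng.betavariate(self.successes[v], self.failures[v])
                 for v in self.successes}
        return max(draws, key=draws.get)

    def update(self, variant, converted: bool) -> None:
        if converted:
            self.successes[variant] += 1
        else:
            self.failures[variant] += 1


def simulate(true_rates: dict, visitors: int, seed: int = 0) -> dict:
    """Count how often each variant is shown when true rates are known."""
    rng = random.Random(seed)
    sampler = ThompsonSampler(true_rates)
    shown = {v: 0 for v in true_rates}
    for _ in range(visitors):
        variant = sampler.choose(rng)
        shown[variant] += 1
        sampler.update(variant, rng.random() < true_rates[variant])
    return shown


print(simulate({"A": 0.03, "B": 0.05}, 10_000))
```

The trade-off is that a bandit sends fewer visitors to weaker variants during the test but provides less clean statistical inference than a fixed-split A/B test.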

FAQs

- What is the ideal sample size for an A/B test?

There is no single ideal number: the required sample depends on your baseline conversion rate, the smallest lift you want to detect, and your chosen significance level and statistical power. Detecting modest lifts on a low baseline conversion rate often takes thousands to tens of thousands of visitors per variant, so calculate the number before the test starts.

- How long should I run an A/B test?

Run the test until it reaches the sample size you calculated in advance, and for at least one to two full weeks so that both weekday and weekend behavior are represented. Stopping as soon as results look significant inflates the false-positive rate.

- Can A/B testing be applied to mobile apps?

Yes, A/B testing is commonly used in mobile app design to optimize features like onboarding flows and in-app purchases.

- What happens if the test results are inconclusive?

Inconclusive results may mean that the change had minimal impact or that the test lacked the traffic to detect it; the next step is either a new hypothesis or a larger, longer test.

- How do I prioritize which elements to test first?

Prioritize high-impact elements like CTAs, landing pages, and navigation menus that directly affect user actions.

- Is A/B testing suitable for small websites?

Yes, but smaller sites should focus on high-traffic pages and plan for longer testing periods to gather sufficient data.
